Here's Why I Still Don't Buy the Hype Around Google Gemini

Gemini might not be as good as it seems.

Google’s Gemini was just unveiled, and I haven’t seen this much hype since OpenAI released ChatGPT.

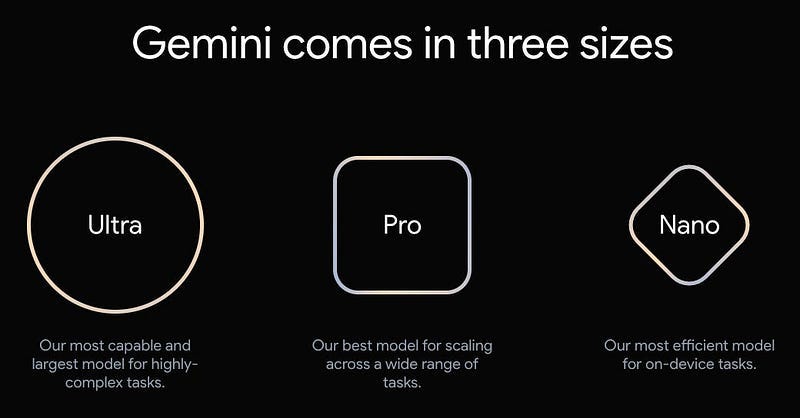

Gemini is Google’s most powerful AI model, and what sets it apart from others is its multimodality. Traditionally, achieving multimodality meant combining separate models, each trained for a specific modality (text, images, etc.). Howe…