How Google Quietly Took the Lead in the AI Race with Gemini 2.5

This model isn’t just smart on paper

Something feels different this time: it doesn’t seem like just another failed launch from Google. I don’t want to downplay the DeepMind team’s past efforts, but the truth is that they didn’t always meet user expectations.

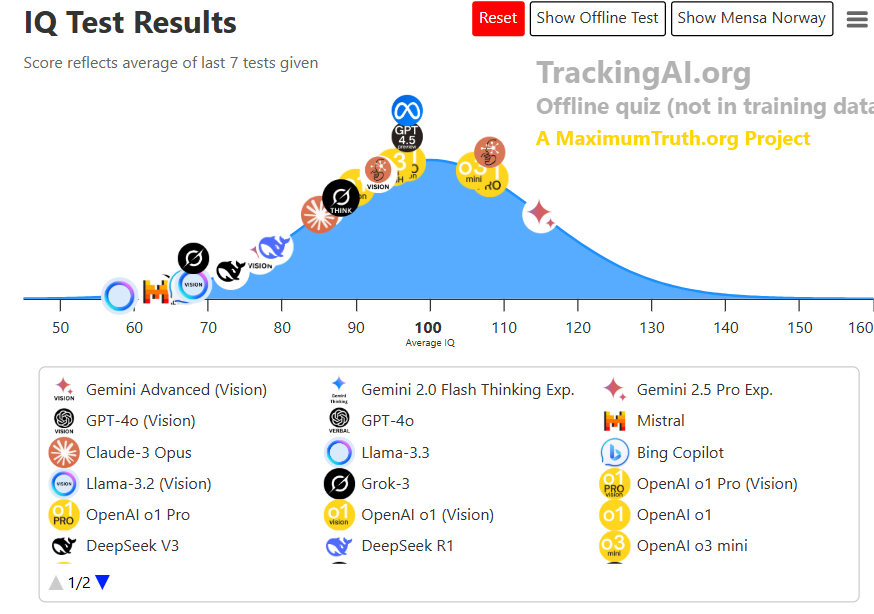

Just a few weeks ago, Google released Gemini 2.5 Pro, and the internet lit up. Maybe not quite as explosively as with D…