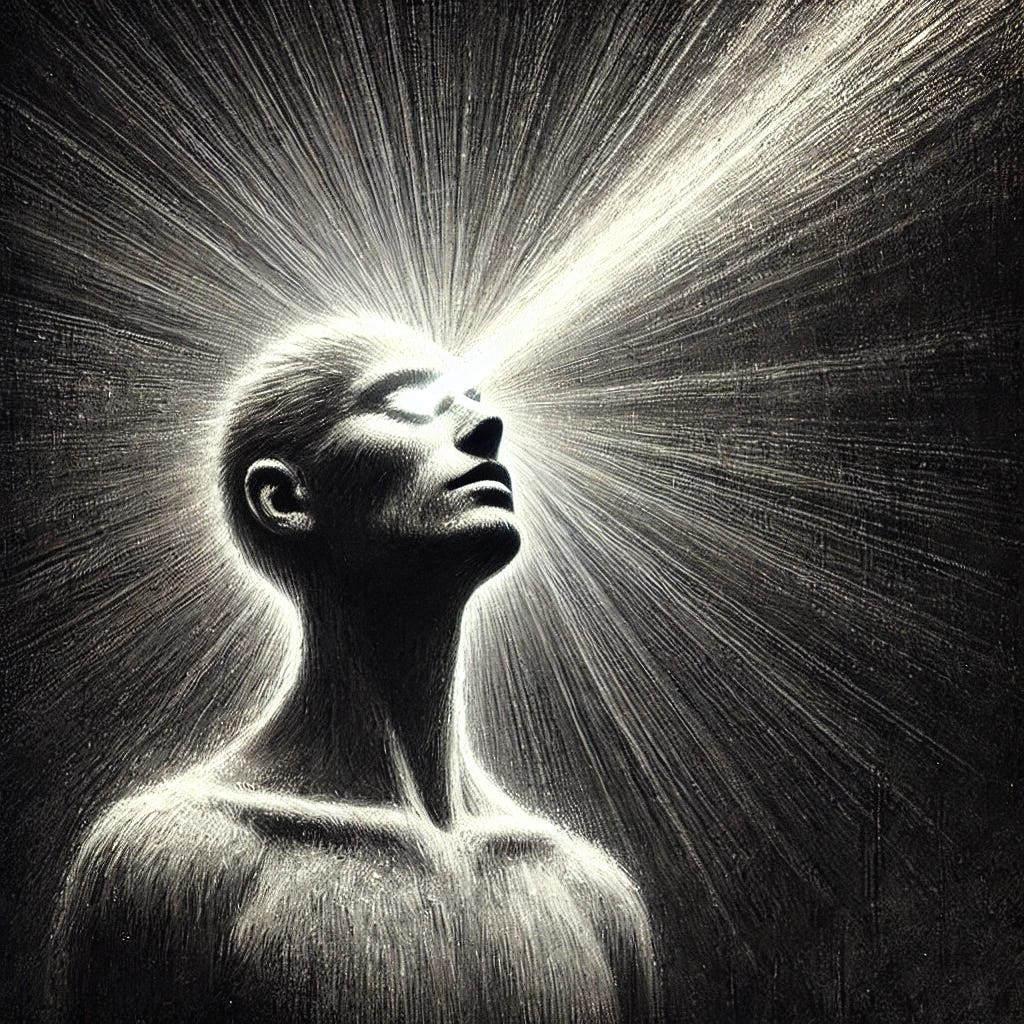

Sharper Machines, Duller Minds: The Paradox of AI Intelligence

How AI is reshaping human intelligence

It has been a big week for AI. Musk launched Grok 3, Google introduced AI Co-Scientist, and rumors about OpenAI’s GPT-5 release are picking up.

One of the most notable aspects of these launches is how Musk described Grok 3: he called it “scarily smart.”

To be fair, Grok 3 is truly impressive. It quickly outperformed mos…