The Hidden Challenges of AI

Let's discuss the side effects of this new technology.

Things changed on November 30th, 2022. OpenAI rolled out ChatGPT to the public, marking a pivotal moment in the history of AI. The buzz around this technology has been non-stop – we've seen a flurry of articles, tutorial videos, and use cases demonstrating how it can streamline our daily tasks. I'll admit, I've been captivated by this avalanche of information, which is largely a good thing. However, this might make us lose sight of the bigger picture – the true significance of this new era.

What we've read, tested, and experienced through our endless interactions with AI has left us in awe, so much so that I'd dare to say we've developed a bit of a bias and lost sight of crucial, inherent human aspects like freedom of thought. This insatiable thirst for more from these models often overshadows the critical thinking we all possess. Don't get me wrong – I'm not opposed to the development of current and future technologies and innovations. I'm simply advocating for discussions about the side effects or collateral impacts of this new technology.

With this in mind, I'd like to highlight three key points. My goal is to encourage you to become active, critical participants in the realm of AI.

1. Smarter models, but less human touch

Work is where we, as humans, spend a significant portion of our time, employing various strategies to achieve personal successes or to advance a company's goals. In this regard, technology has become an invaluable and, frankly, inescapable ally if we aim to optimize time and resources. That’s why, in my view, one of the major draws for all of us who regularly use AI models is the genuinely positive impact of applying them across different professional and personal domains. Everything seems fine on the surface. However, it's also worth pondering just how much of our work is now being done by some form of AI, and how much influence it’s gaining without our full awareness.

It wasn't until recently that I realized certain tasks were being completed almost instantaneously, tasks in which I used to play an active role – such as conceptualizing solutions, proposing alternatives, and bringing various situations to a final resolution over a typical office day. I'm not suggesting that ChatGPT or other chatbots have completely replaced me, but I do see how their ability to respond to complex issues is growing. They learn, and they do it faster and faster, making decisions on our behalf before we even realize it. Stopping this advancement is not only impossible, it's not something I desire. I like the idea of having a 'second brain' through AI, but I believe it should always remain in a supportive role.

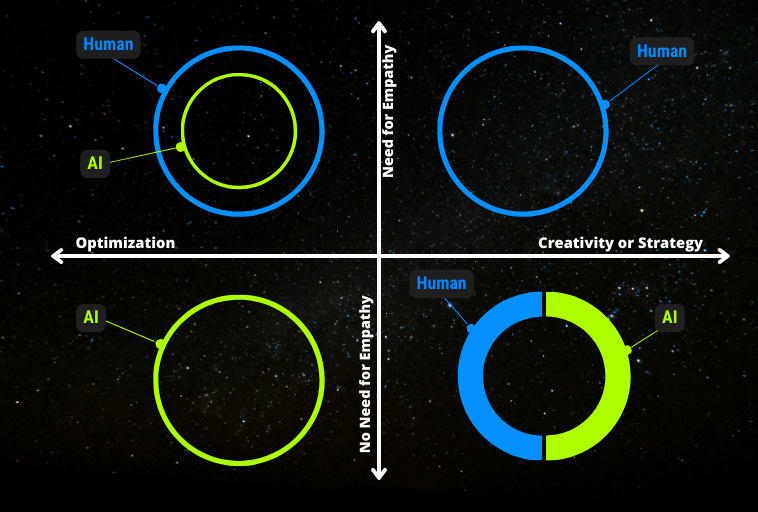

It’s pretty clear by now that AI can handle routine tasks with greater efficiency. Yet, it's crucial to consider that AI, at least for the time being, doesn't possess creativity or empathy. It also lacks the capacity to engage with humans in a way that builds trust. The more a job relies on empathy or creativity, the less likely it is that AI will step in to replace human roles in those areas. To put this into perspective, I'll outline a framework to shed light on this.

Constructing the aforementioned framework was unthinkable just a few years ago when we weren't yet inundated with so many intelligent models.

Reliance on AI might become a new commodity, a necessity we have to embrace … much like the internet was in its time.

One of the conjectures about AI in the mid-20th century was its potential to replicate human consciousness. This meant not just imitating human cognitive abilities like learning and problem-solving, but also recreating consciousness itself – encompassing the subjective experience and self-awareness that characterize humans. While these intelligent models, which we use almost daily, are still not on par with our human nature, it's overwhelming and quite sensational how they handle many activities humans once enjoyed, even though it sometimes came at the cost of competitiveness and the much-vaunted productivity.

It's clear that as human beings, we're often driven by fear. So, what exactly is the fear or threat posed by superintelligence?

Pushing aside the doomsday scenarios we've seen in sci-fi flicks like 'Terminator' or 'The Matrix', I've got a couple of questions:

Why are we expecting AI to get even smarter? I'm talking about the buzz and anticipation around the upcoming enhancements in GPT-4 or Google’s Gemini model.

And why are we entrusting so much power to AI? This question comes from the realization that, in the absence of consciousness, these brainy models could potentially turn the tables on us.

Actually, I think the answer is more straightforward than you might expect. AI models should have been programmed with goals that match ours right from the start. This is crucial, especially as AI systems grow more self-reliant and adept at making their own decisions. Just imagine a scenario where, in the pursuit of a specific goal (which could be as simple as responding to a prompt), these models bypass ethical boundaries.